Maximum Likelihood Estimation

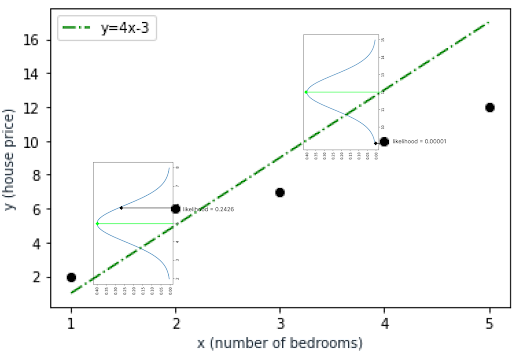

Maximum likelihood estimation (MLE) is a widely used statistical technique for estimating the parameters of a model from a set of observed data. The goal of MLE is to find the parameter values that maximize the likelihood of the observed data, that is, the values under which the data are most probable. The approach treats the observed data as a realization of a random process whose probability distribution is governed by the model parameters.

Introduction to Maximum Likelihood Estimation

In statistics, the likelihood function gives the probability (or, for continuous data, the probability density) of the observed data as a function of the model parameters, with the data held fixed. It is typically denoted L(θ|x), where θ represents the model parameters and x represents the observed data. Although it is computed from the data distribution, L is not a probability distribution over θ. The maximum likelihood estimate of the parameters, written θ̂, is the value of θ that maximizes the likelihood function; in other words, it is the value of θ under which the observed data are most likely.
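A minimal sketch of the definition, assuming a Bernoulli model for ten hypothetical coin flips (the data and the helper name `bernoulli_likelihood` are illustrative, not from any library): it evaluates L(θ|x) on a grid of candidate θ values and keeps the largest. For this model the maximizer is known to be the sample proportion of ones, which gives a check on the grid search.

```python
# Hypothetical data: 1 = heads, 0 = tails (7 heads out of 10 flips)
flips = [1, 0, 1, 1, 0, 1, 1, 0, 1, 1]

def bernoulli_likelihood(theta, data):
    """L(theta | data) for independent Bernoulli flips: product of per-flip probabilities."""
    likelihood = 1.0
    for x in data:
        likelihood *= theta if x == 1 else (1.0 - theta)
    return likelihood

# Evaluate the likelihood on a grid of candidate values strictly inside (0, 1)
grid = [i / 1000 for i in range(1, 1000)]
theta_hat = max(grid, key=lambda t: bernoulli_likelihood(t, flips))

print(theta_hat)                # grid maximizer
print(sum(flips) / len(flips))  # closed-form MLE: the sample proportion
```

A grid search is only practical for one or two parameters; larger models are fit with closed-form solutions or numerical optimizers.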

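Standard treatments of MLE usually assume that the observations are independent and identically distributed (i.i.d.), which lets the likelihood factor into a product of per-observation terms, and that the model is correctly specified. Independence is a convenience rather than a strict requirement: for dependent data such as time series, the likelihood is built from the joint or conditional distribution of the observations instead. MLE does require a likelihood that can be written down and evaluated, which is not always possible, for example when a model is defined only through a complex simulator. When these conditions hold, together with standard regularity conditions, MLE provides a powerful and flexible framework for estimating model parameters.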
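Under the i.i.d. assumption, the likelihood factors into a product of per-observation terms, and taking logarithms turns that product into a sum. The notation below (f for the per-observation density or mass function, n for the sample size, ℓ for the log-likelihood) is a standard convention assumed here rather than notation defined elsewhere in this article.

```latex
L(\theta \mid x_1, \dots, x_n) = \prod_{i=1}^{n} f(x_i \mid \theta),
\qquad
\ell(\theta) = \log L(\theta \mid x_1, \dots, x_n) = \sum_{i=1}^{n} \log f(x_i \mid \theta),
\qquad
\hat{\theta} = \arg\max_{\theta} L(\theta \mid x_1, \dots, x_n) = \arg\max_{\theta} \ell(\theta).
```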

Key Concepts in Maximum Likelihood Estimation

There are several key concepts in MLE: the likelihood function, the log-likelihood function, and the maximum likelihood estimate. The likelihood function gives the probability of the observed data as a function of the model parameters. The log-likelihood function, ℓ(θ) = log L(θ|x), is its logarithm. It is preferred in practice because it turns products over observations into sums, which are easier to differentiate and avoid numerical underflow, and because the logarithm is strictly increasing, it has the same maximizer as the likelihood itself. The maximum likelihood estimate is therefore the parameter value that maximizes either function.
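A minimal sketch of why the log-likelihood is preferred, assuming synthetic normal data generated with Python's `random` module (the seed, sample size, and helper names are illustrative): the raw product of 2000 densities underflows to zero in double precision, while the sum of log-densities stays finite. It also computes the closed-form normal MLEs, the sample mean and the variance with divisor n, which are the solutions of setting the log-likelihood's derivatives to zero.

```python
import math
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible
data = [rng.gauss(5.0, 2.0) for _ in range(2000)]  # synthetic sample: mu = 5, sigma = 2

def normal_pdf(x, mu, sigma2):
    return math.exp(-(x - mu) ** 2 / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)

def normal_loglik(mu, sigma2, xs):
    """Log-likelihood of i.i.d. normal data: a sum of log-densities."""
    n = len(xs)
    ss = sum((x - mu) ** 2 for x in xs)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - ss / (2 * sigma2)

# Multiplying 2000 densities underflows to 0.0 in double precision...
product = 1.0
for x in data:
    product *= normal_pdf(x, 5.0, 4.0)

# ...while the log-likelihood stays a finite, usable number.
n = len(data)
mu_hat = sum(data) / n                                 # MLE of the mean
sigma2_hat = sum((x - mu_hat) ** 2 for x in data) / n  # MLE of the variance (divides by n)

print(product)
print(normal_loglik(mu_hat, sigma2_hat, data))
```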

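Another important concept in MLE is the likelihood ratio test, which compares the fit of two nested models, where the simpler (null) model is a special case of the more complex one. The test is based on the ratio of the two models' maximized likelihoods, L̂₀ and L̂₁; the statistic 2(ln L̂₁ − ln L̂₀) is approximately chi-squared distributed under the null hypothesis (Wilks' theorem), with degrees of freedom equal to the difference in the number of free parameters, and a large value indicates that the more complex model fits significantly better. The Akaike information criterion (AIC = 2k − 2 ln L̂) and the Bayesian information criterion (BIC = k ln n − 2 ln L̂), where k is the number of estimated parameters and n the sample size, are also widely used alongside MLE to compare models, including non-nested ones; lower values indicate a better trade-off between fit and complexity.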
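A sketch of the likelihood ratio test, AIC, and BIC for two nested Bernoulli models, using hypothetical counts of 62 heads in 100 flips: the null model fixes θ = 0.5 (no free parameters) and the alternative estimates θ (one free parameter). For one degree of freedom the chi-squared upper-tail probability equals `erfc(sqrt(stat / 2))`, which keeps the sketch to the standard library; the chi-squared reference itself is an asymptotic approximation.

```python
import math

heads, n = 62, 100  # hypothetical data

def bernoulli_loglik(theta, heads, n):
    return heads * math.log(theta) + (n - heads) * math.log(1.0 - theta)

# Maximized log-likelihoods of the null (theta = 0.5) and alternative (theta free) models
ll_null = bernoulli_loglik(0.5, heads, n)
theta_hat = heads / n
ll_alt = bernoulli_loglik(theta_hat, heads, n)

# Likelihood ratio statistic: -2 ln(L0 / L1) = 2 (ll_alt - ll_null); 1 degree of freedom here
lr_stat = 2.0 * (ll_alt - ll_null)
p_value = math.erfc(math.sqrt(lr_stat / 2.0))  # upper tail of chi-squared(1)

def aic(loglik, k):
    return 2 * k - 2 * loglik

def bic(loglik, k, n):
    return k * math.log(n) - 2 * loglik

print(f"LR = {lr_stat:.3f}, p = {p_value:.4f}")
print("AIC:", aic(ll_null, 0), aic(ll_alt, 1))
print("BIC:", bic(ll_null, 0, n), bic(ll_alt, 1, n))
```

With these counts the test gives p of about 0.016, and both AIC and BIC are lower for the model that estimates θ.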

| MLE Concept | Description |
|---|---|
| Likelihood Function | The probability (or density) of the observed data, viewed as a function of the model parameters |
| Log-Likelihood Function | The logarithm of the likelihood function; it has the same maximizer and is easier to optimize |
| Maximum Likelihood Estimate | The parameter value that maximizes the likelihood function (equivalently, the log-likelihood function) |
| Likelihood Ratio Test | A statistical test comparing two nested models through the ratio of their maximized likelihoods |
| Akaike Information Criterion (AIC) | Model-comparison score 2k − 2 ln L̂ for k parameters and maximized likelihood L̂; lower is better |
| Bayesian Information Criterion (BIC) | Model-comparison score k ln n − 2 ln L̂ for n observations; lower is better, and it penalizes extra parameters more heavily than AIC once n ≥ 8 |

Applications of Maximum Likelihood Estimation

MLE has a wide range of applications in statistics, engineering, and other fields, including linear regression, logistic regression, and time series analysis. In linear regression with normally distributed errors, the maximum likelihood estimates of the coefficients coincide with the ordinary least squares estimates. In logistic regression, MLE estimates the regression coefficients, which in turn determine the predicted probability of each outcome. In time series analysis, MLE is used to estimate the parameters of models such as ARIMA and GARCH.
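Logistic regression has no closed-form MLE, so its coefficients are found iteratively. A minimal pure-Python sketch using Newton-Raphson for one feature plus an intercept follows; the data, function names, and stopping rule are illustrative assumptions, and a real analysis would normally use a statistics library. Each step solves a 2×2 system built from the score (the gradient of the Bernoulli log-likelihood) and the matrix XᵀWX with weights p(1 − p).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_newton(xs, ys, max_iter=50, tol=1e-10):
    """Maximize the Bernoulli log-likelihood of P(y=1|x) = sigmoid(b0 + b1*x) by Newton-Raphson."""
    b0 = b1 = 0.0
    for _ in range(max_iter):
        g0 = g1 = 0.0          # score: gradient of the log-likelihood
        h00 = h01 = h11 = 0.0  # X^T W X, the negative Hessian
        for x, y in zip(xs, ys):
            p = sigmoid(b0 + b1 * x)
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: solve (X^T W X) * step = score using the explicit 2x2 inverse
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1

# Hypothetical data; the classes overlap, so a finite MLE exists
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
ys = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_logistic_newton(xs, ys)
print(b0, b1)
print([round(sigmoid(b0 + b1 * x), 3) for x in xs])  # fitted probabilities
```

If the two classes were perfectly separable, the likelihood would keep increasing as the slope grows, no finite MLE would exist, and the coefficients would diverge.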

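MLE also underlies much of machine learning and data science. Training a classifier, including a neural network, by minimizing cross-entropy loss is equivalent to maximizing the log-likelihood of a categorical (or, for two classes, Bernoulli) model of the labels. Decision trees, by contrast, are usually grown greedily using split criteria such as Gini impurity or entropy rather than by maximizing a likelihood directly. In these applications, MLE is often combined with techniques such as cross-validation and regularization; adding a penalty such as an L2 term to the log-likelihood yields penalized maximum likelihood, which curbs overfitting and improves robustness.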
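A sketch of the cross-entropy connection, assuming a binary classifier whose outputs are hypothetical predicted probabilities: the mean cross-entropy loss used to train such models equals the Bernoulli negative log-likelihood divided by the number of examples, so minimizing one maximizes the other. The `penalized_objective` helper, its `weights` list, and the `lam` value are illustrative, showing how an L2 penalty turns the objective into a penalized likelihood.

```python
import math

def binary_cross_entropy(labels, probs):
    """Mean cross-entropy loss as used to train binary classifiers."""
    return -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                for y, p in zip(labels, probs)) / len(labels)

def bernoulli_neg_loglik(labels, probs):
    """Negative log-likelihood of the labels under independent Bernoulli(p_i) models."""
    nll = 0.0
    for y, p in zip(labels, probs):
        nll -= math.log(p if y == 1 else 1 - p)
    return nll

def penalized_objective(labels, probs, weights, lam):
    """Negative log-likelihood plus an L2 penalty on the model weights."""
    return bernoulli_neg_loglik(labels, probs) + lam * sum(w * w for w in weights)

labels = [1, 0, 1, 1, 0]
probs = [0.9, 0.2, 0.7, 0.6, 0.1]  # hypothetical model outputs
print(binary_cross_entropy(labels, probs))
print(bernoulli_neg_loglik(labels, probs) / len(labels))
print(penalized_objective(labels, probs, weights=[1.0, -2.0], lam=0.1))
```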

Advantages and Limitations of Maximum Likelihood Estimation

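MLE has several advantages. It is a flexible approach that applies to a wide range of distributions and models, from simple parametric families to complex models with many parameters. Under standard regularity conditions, maximum likelihood estimators are also consistent, asymptotically normal, and asymptotically efficient, and they are invariant under reparameterization (the MLE of g(θ) is g(θ̂)). However, MLE also has limitations: its results depend on the choice of model, it can be sensitive to outliers in the data, and it can be computationally intensive when no closed-form solution exists, especially for large datasets.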

Some of the key advantages of MLE include:

- Flexibility: MLE can be used with a wide range of distributions and models

- Ability to handle complex models: MLE can be used to estimate the parameters of complex models such as generalized linear models, time series models, and neural networks

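- Ability to handle large datasets: the large-sample approximations behind MLE (consistency, asymptotic normality) become more accurate as the amount of data grows, and the log-likelihood can be optimized on very large datasets with stochastic gradient methods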
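To illustrate the flexibility point, here is a sketch of a generic one-parameter MLE routine that accepts any log-density, assuming the log-likelihood is unimodal on the search interval. The helper names, the bracket [1e-6, 50], and the synthetic exponential data are illustrative choices, not a library API. For the exponential distribution the closed-form MLE of the rate is 1 / (sample mean), which provides a check. Golden-section search is used here only for simplicity; gradient-based optimizers are more common in practice.

```python
import math
import random

def golden_section_max(f, lo, hi, tol=1e-10):
    """Maximize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc > fd:   # maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:         # maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2

def fit_mle_1d(log_pdf, data, lo, hi):
    """Numerical MLE for any one-parameter model given its log-density log_pdf(x, param)."""
    return golden_section_max(lambda p: sum(log_pdf(x, p) for x in data), lo, hi)

def exponential_log_pdf(x, rate):
    return math.log(rate) - rate * x

rng = random.Random(0)
data = [rng.expovariate(2.0) for _ in range(500)]  # synthetic data, true rate = 2

rate_numeric = fit_mle_1d(exponential_log_pdf, data, 1e-6, 50.0)
rate_closed_form = len(data) / sum(data)
print(rate_numeric, rate_closed_form)
```

Swapping in a different `log_pdf` (for example, a Poisson or Rayleigh log-density) reuses the same routine unchanged, which is the practical sense in which MLE is flexible.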

Some of the key limitations of MLE include:

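- Sensitivity to the choice of model: if the assumed distribution is misspecified, the estimates converge to the parameters of the model closest to the true data-generating process (in the Kullback-Leibler sense) rather than to the true values, and the usual standard errors can be misleading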

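- Presence of outliers in the data: under light-tailed models such as the normal distribution, a few extreme observations can pull the estimates far from the bulk of the data; the normal MLE of the mean is the sample mean, for example, which has no resistance to outliers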

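- Computational intensity: when no closed-form solution exists, MLE requires iterative numerical optimization, whose cost grows with the dataset size and which may converge to a local rather than the global maximum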
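A small sketch of the outlier limitation, using hypothetical measurements: under a normal model the location MLE is the sample mean, so a single gross error drags it far away, whereas under a heavier-tailed Laplace model the location MLE is the sample median, which barely moves. Switching to a Laplace model is one illustrative remedy, not the only one.

```python
import statistics

clean = [9.8, 10.1, 10.0, 9.9, 10.2]  # hypothetical measurements
with_outlier = clean + [100.0]        # one gross error appended

# Normal model: the location MLE is the sample mean
print(statistics.mean(clean), statistics.mean(with_outlier))

# Laplace model: the location MLE is the sample median
# (with an even sample size, any value between the two middle points maximizes the likelihood)
print(statistics.median(clean), statistics.median(with_outlier))
```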

What is the difference between the likelihood function and the log-likelihood function?

The likelihood function gives the probability (or density) of the observed data as a function of the model parameters, while the log-likelihood function is its logarithm. Because the logarithm is strictly increasing, both are maximized by the same parameter values; the log-likelihood is preferred in practice because it turns products into sums and avoids numerical underflow.

What is the purpose of the likelihood ratio test?

The likelihood ratio test compares the fit of two nested models. Its statistic, twice the difference between the maximized log-likelihoods of the larger and smaller models, is approximately chi-squared distributed under the null hypothesis, so it can be used to determine whether the more complex model fits significantly better.

What are some of the advantages and limitations of MLE?

MLE is flexible, applies to a wide range of distributions and models, and has strong large-sample properties such as consistency and asymptotic efficiency. Its main limitations are sensitivity to model misspecification and outliers, and computational cost when the likelihood must be maximized numerically.